The variational method is an approximate method used in quantum mechanics. Compared to perturbation theory, the variational method can be more robust in situations where it is hard to determine a good unperturbed Hamiltonian (i.e., one which makes the perturbation small but is still solvable). On the other hand, in cases where there is a good unperturbed Hamiltonian, perturbation theory can be more efficient than the variational method.

The basic idea of the variational method is to guess a ``trial'' wavefunction for the problem that depends on some adjustable parameters, called ``variational parameters.'' These parameters are adjusted until the energy of the trial wavefunction is minimized. The resulting trial wavefunction and its corresponding energy are the variational approximations to the exact ground-state wavefunction and energy.
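As a minimal sketch of this procedure, consider the hydrogen atom in atomic units with a one-parameter Gaussian trial function $e^{-\alpha r^2}$. This example is an illustration chosen here, not one taken from the text. For this trial function the energy is known in closed form, $E(\alpha) = \tfrac{3}{2}\alpha - 2\sqrt{2\alpha/\pi}$, and the names `trial_energy` and `golden_section_minimize` are hypothetical helpers written for this sketch.

```python
import math

def trial_energy(alpha):
    """Energy (hartree) of the Gaussian trial function exp(-alpha r^2) for hydrogen."""
    kinetic = 1.5 * alpha                                  # <T> for the Gaussian
    potential = -2.0 * math.sqrt(2.0 * alpha / math.pi)    # <-1/r> for the Gaussian
    return kinetic + potential

def golden_section_minimize(f, lo, hi, tol=1e-10):
    """Minimize a unimodal function f on [lo, hi] by golden-section search."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    c = b - inv_phi * (b - a)
    d = a + inv_phi * (b - a)
    while abs(b - a) > tol:
        if f(c) < f(d):
            b = d          # minimum lies in [a, d]
        else:
            a = c          # minimum lies in [c, b]
        c = b - inv_phi * (b - a)
        d = a + inv_phi * (b - a)
    return 0.5 * (a + b)

# Adjust the single variational parameter until the energy is minimized.
alpha_best = golden_section_minimize(trial_energy, 0.01, 2.0)
energy_best = trial_energy(alpha_best)
exact_energy = -0.5  # exact hydrogen ground-state energy in hartree

print(f"optimal alpha      = {alpha_best:.6f}")
print(f"variational energy = {energy_best:.6f} hartree")
print(f"exact energy       = {exact_energy:.6f} hartree")
```

Analytically the minimum lies at $\alpha = 8/(9\pi) \approx 0.283$ with $E = -4/(3\pi) \approx -0.424$ hartree, which is above the exact value of $-0.5$ hartree, as the variational theorem requires. A Gaussian cannot reproduce the cusp of the exact $e^{-r}$ solution, so the gap does not close with this single parameter.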

Why would it make sense that the best approximate trial wavefunction is the one with the lowest energy? This results from the Variational Theorem, which states that the energy $E$ of any trial wavefunction is always an upper bound to the exact ground state energy $E_0$. This can be proven easily. Let the trial wavefunction be denoted $\phi$. Any trial function can formally be expanded as a linear combination of the exact eigenfunctions $\psi_i$. Of course, in practice, we do not know the $\psi_i$, since we are assuming that we are applying the variational method to a problem we cannot solve analytically. Nevertheless, that does not prevent us from using the exact eigenfunctions in our proof, since they certainly exist and form a complete set, even if we do not happen to know them:

$$\phi = \sum_i a_i \psi_i, \tag{15}$$

$$H \psi_i = E_i \psi_i. \tag{16}$$

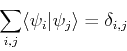

We are assuming that the physical states are normalized, i.e., that their norm is equal to unity (we can always rescale a state so that this holds).

Let us assume that we have a candidate wavefunction to describe the ground state, which we call $\phi$, and that this function depends on a set of parameters $\{c_i\}$, which we call variational parameters and which are complex numbers.

Ignoring complications involved with a continuous spectrum of $H$, suppose that the spectrum is bounded from below and that its greatest lower bound is $E_0$.

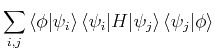

So, the approximate energy corresponding to this wavefunction is the expectation value of $H$:

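$$E[\phi] = \frac{\langle \phi | H | \phi \rangle}{\langle \phi | \phi \rangle}, \tag{17}$$

$$E[\phi] \geq E_0. \tag{18}$$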

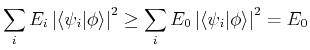

In other words, the energy of any approximate wavefunction is always greater than or equal to the exact ground state energy $E_0$. This explains the strategy of the variational method: since the energy of any approximate trial function is always above the true energy, any variations in the trial function which lower its energy necessarily bring the approximate energy closer to the exact answer. (The trial wavefunction is also a better approximation to the true ground state wavefunction as the energy is lowered, although not necessarily in every possible sense unless the limit $\phi = \psi_0$ is reached.)
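The bound can be made explicit in a few lines. The following is a sketch for a discrete spectrum, writing the (unknown) expansion coefficients of the trial function in the exact eigenfunctions as $a_i$, a notation chosen here to keep them distinct from the variational parameters, and assuming the exact eigenfunctions are orthonormal:

```latex
\begin{align*}
\phi &= \sum_i a_i \psi_i, \qquad H \psi_i = E_i \psi_i, \qquad
        \langle \psi_i | \psi_j \rangle = \delta_{ij}, \\
E[\phi] &= \frac{\langle \phi | H | \phi \rangle}{\langle \phi | \phi \rangle}
         = \frac{\sum_{ij} a_i^* a_j E_j \langle \psi_i | \psi_j \rangle}
                {\sum_{ij} a_i^* a_j \langle \psi_i | \psi_j \rangle}
         = \frac{\sum_i |a_i|^2 E_i}{\sum_i |a_i|^2}, \\
E[\phi] - E_0 &= \frac{\sum_i |a_i|^2 \, (E_i - E_0)}{\sum_i |a_i|^2} \geq 0 .
\end{align*}
```

Every term in the last numerator is non-negative because $E_i \geq E_0$ for all $i$, so equality holds only when the trial function has no component along any eigenfunction with $E_i > E_0$.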

Frequently, the trial function is written as a linear combination of basis functions, such as

$$\phi = \sum_i c_i \phi_i.$$

This leads to the linear variation method, and the variational parameters are the expansion coefficients $c_i$.

We shall assume that the possible solutions are restricted to a subspace of the Hilbert space, and we shall seek the best possible solution in this subspace.

The energy for this approximate wavefunction is just

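$$E[\phi] = \frac{\sum_{ij} c_i^* c_j H_{ij}}{\sum_{ij} c_i^* c_j S_{ij}},$$

where $H_{ij} = \langle \phi_i | H | \phi_j \rangle$ and $S_{ij} = \langle \phi_i | \phi_j \rangle$ are the Hamiltonian and overlap matrix elements between the basis functions $\phi_i$.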

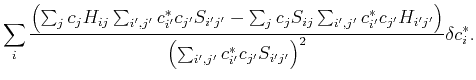

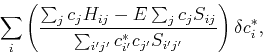

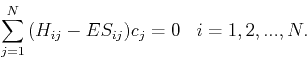

In order to find the optimal solution, we need to minimize this energy functional with respect to the variational parameters $c_i$, or calculate the variation such that:

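$$\delta E[\phi] = 0, \tag{20}$$

that is, treating $c_i$ and $c_i^*$ as independent variables,

$$\frac{\partial E}{\partial c_i^*} = 0 \quad \text{for all } i. \tag{21}$$

Writing the energy expression as

$$E \sum_{ij} c_i^* c_j S_{ij} = \sum_{ij} c_i^* c_j H_{ij} \tag{22}$$

and differentiating both sides with respect to a particular $c_i^*$ gives the secular equations

$$\sum_j \left( H_{ij} - E S_{ij} \right) c_j = 0, \tag{23}$$

or, in matrix form, the generalized eigenvalue problem

$$\mathbf{H} \mathbf{c} = E \, \mathbf{S} \mathbf{c}. \tag{24}$$

A nontrivial solution exists only if the secular determinant vanishes:

$$\det \left( \mathbf{H} - E \mathbf{S} \right) = 0. \tag{25}$$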

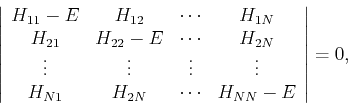

If an orthonormal basis is used, the secular equation is greatly simplified because $S_{ij} = \delta_{ij}$ (1 for $i = j$ and 0 for $i \neq j$), and we obtain:

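$$\sum_j \left( H_{ij} - E \delta_{ij} \right) c_j = 0, \tag{26}$$

$$\det \left( \mathbf{H} - E \mathbf{1} \right) = 0. \tag{27}$$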

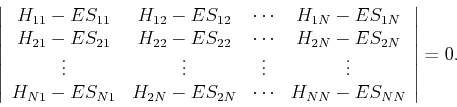

In either case, the secular determinant for $N$ basis functions gives an $N$-th order polynomial in $E$ which is solved for $N$ different roots, each of which approximates a different eigenvalue.
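For $N = 2$ the secular determinant is a quadratic in $E$ and can be solved by hand. The sketch below does this for a toy problem chosen here for illustration: a particle in a box of unit length (units $\hbar = m = 1$) with the two non-orthogonal basis functions $x(1-x)$ and $x^2(1-x)^2$. The matrix elements listed are the exact integrals for that assumed basis.

```python
import math

# Matrix elements for phi_1 = x(1-x), phi_2 = x^2 (1-x)^2 on [0, 1],
# with H = -(1/2) d^2/dx^2 (assumed units: hbar = m = 1, box length 1).
H11, H12, H22 = 1 / 6, 1 / 30, 1 / 105
S11, S12, S22 = 1 / 30, 1 / 140, 1 / 630

# For a 2x2 problem, det(H - E S) = a E^2 + b E + c.
a = S11 * S22 - S12 ** 2
b = -(H11 * S22 + H22 * S11 - 2.0 * H12 * S12)
c = H11 * H22 - H12 ** 2

# The two roots of the secular polynomial, in ascending order.
disc = math.sqrt(b * b - 4.0 * a * c)
roots = sorted([(-b - disc) / (2.0 * a), (-b + disc) / (2.0 * a)])

exact_ground = math.pi ** 2 / 2.0   # exact ground-state energy of the box
print(f"secular roots      : {roots[0]:.6f}, {roots[1]:.6f}")
print(f"exact ground state : {exact_ground:.6f}")
```

The polynomial reduces to $E^2 - 56E + 252 = 0$, with roots $28 \pm \sqrt{532}$, i.e. about 4.93487 and 51.0651. The lower root lies just above the exact $\pi^2/2 \approx 4.93480$. Both basis functions are symmetric about the center of the box, so the upper root is best read as a (much cruder) estimate of the next symmetric level, $9\pi^2/2 \approx 44.41$.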

These equations can be easily solved using readily available library routines, such as those in Numerical Recipes or LAPACK.

At this point one may wonder where the approximation is: aren't we solving the problem exactly? If we take into account a complete basis set, the answer is ``yes, we are solving the problem exactly''. But as we said before, the Hilbert space is very large, and we therefore have to limit the basis size to a number that is easily tractable with a computer. Therefore, we have to work in a constrained Hilbert space with a relatively small number of basis states kept, which makes the result variational.

Because the computer time needed for numerical diagonalization scales as the third power of the linear matrix size, we want to keep the basis size as small as possible. Therefore, the basis wavefunctions must be chosen carefully: it should be possible to approximate the exact solution to the full problem with a small number of basis states. In order to do that, we need some good intuition about the underlying physics of the problem.
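The effect of truncating the basis can be seen in a small numerical experiment. The sketch below is an illustration under stated assumptions, not a prescription from the text: it uses a particle in a box of unit length ($\hbar = m = 1$) with the hypothetical basis $\phi_k = [x(1-x)]^k$, builds the Hamiltonian and overlap matrices by exact polynomial integration, and solves the generalized eigenvalue problem by a Cholesky reduction followed by NumPy's `numpy.linalg.eigh`. The helper names are invented for this example.

```python
import numpy as np
from numpy.polynomial import Polynomial

def integrate01(p):
    """Exact integral of the polynomial p over [0, 1]."""
    antiderivative = p.integ()
    return antiderivative(1.0) - antiderivative(0.0)

def box_matrices(n):
    """H and S in the basis phi_k = [x(1-x)]^k, k = 1..n (hbar = m = L = 1)."""
    base = Polynomial([0.0, 1.0, -1.0])            # x(1 - x) = x - x^2
    basis = [base ** k for k in range(1, n + 1)]
    H = np.empty((n, n))
    S = np.empty((n, n))
    for i, fi in enumerate(basis):
        for j, fj in enumerate(basis):
            # Kinetic energy after integration by parts: (1/2) int phi_i' phi_j'
            H[i, j] = 0.5 * integrate01(fi.deriv() * fj.deriv())
            S[i, j] = integrate01(fi * fj)
    return H, S

def generalized_eigh(H, S):
    """Solve H c = E S c by Cholesky reduction to a standard symmetric problem."""
    L = np.linalg.cholesky(S)                      # S = L L^T
    A = np.linalg.solve(L, H)                      # L^{-1} H
    A = np.linalg.solve(L, A.T).T                  # L^{-1} H L^{-T}
    energies, y = np.linalg.eigh(A)
    coeffs = np.linalg.solve(L.T, y)               # back-transform: c = L^{-T} y
    return energies, coeffs

exact = np.pi ** 2 / 2.0
ground_energies = []
for n in (1, 2, 3):
    H, S = box_matrices(n)
    energies, _ = generalized_eigh(H, S)
    ground_energies.append(energies[0])
    print(f"n = {n}: E0 = {energies[0]:.8f}  (exact {exact:.8f})")
```

Each added basis function can only lower the estimate, since the smaller subspace is contained in the larger one, and the estimate never drops below the exact value. With one function the result is exactly 5; with two it is already within $10^{-4}$ of $\pi^2/2$. This particular basis becomes increasingly ill-conditioned as it grows, which is one practical reason to prefer orthonormal bases. If SciPy is available, `scipy.linalg.eigh(H, S)` accepts the overlap matrix directly and could replace the hand-written reduction.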

We have used a linear parametrization of the wave function. This greatly simplifies the calculations. However, nonlinear parametrizations are also possible, as in the case of Hartree-Fock theory.

The variational method lies behind Hartree-Fock theory and the configuration interaction method for the electronic structure of atoms and molecules, as we will see in the following chapter.